- Download spark llap jar how to

- Download spark llap jar driver

Note that JARs and files are copied to the working directory for eachĪs noted, JAR files are copied to the working directory for each Worker node. That are pushed to each worker, or shared via NFS, GlusterFS, etc. No network IO will be incurred, and works well for large files/JARs

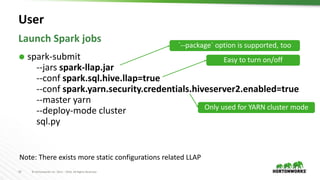

local: - a URI starting with local:/ isĮxpected to exist as a local file on each worker node. hdfs:, http:, https:, ftp: - these pull down files and JARs. Download spark llap jar driver#

file: - Absolute paths and file:/ URIs are served by the driver’s HTTPįile server, and every executor pulls the file from the driver HTTP. Spark uses the following URL scheme to allow different Included with the -jars option will be automatically transferred to When using spark-submit, the application jar along with any jars In "Submitting Applications", the Spark documentation does a good job of explaining the accepted prefixes for files: You have to manually make your JAR files available to all the worker nodes via HDFS, S3, or Other sources which are available to all nodes. This means the job isn't running directly from the Master node. You can see that when you start your Spark job: 16/05/08 17:29:12 INFO HttpFileServer: HTTP File server directory is /tmp/spark-48911afa-db63-4ffc-a298-015e8b96bc55/httpd-84ae312b-5863-4f4c-a1ea-537bfca2bc2bġ6/05/08 17:29:12 INFO HttpServer: Starting HTTP Serverġ6/05/08 17:29:12 INFO Utils: Successfully started service 'HTTP file server' on port 58922.ġ6/05/08 17:29:12 INFO SparkContext: Added JAR /opt/foo.jar at with timestamp 1462728552732ġ6/05/08 17:29:12 INFO SparkContext: Added JAR /opt/aws-java-sdk-1.10.50.jar at with timestamp 1462728552767Ĭluster mode - In cluster mode Spark selected a leader Worker node to execute the Driver process on.

This depends on the mode which you're running your job under:Ĭlient mode - Spark fires up a Netty HTTP server which distributes the files on start up for each of the worker nodes. If you want a certain JAR to be effected on both the Master and the Worker, you have to specify these separately in BOTH flags.

to set extra class path on the Worker nodes. or it's alias -driver-class-path to set extra classpaths on the node running the driver. There are a couple of ways to set something on the classpath: It is definitely important to keep in mind.ĬlassPath is affected depending on what you provide. The poster does make a good remark on the difference between a local driver (yarn-client) and remote driver (yarn-cluster). I found a nice article on an answer to another posting. driver-library-path additional1.jar:additional2.jar \

Would it be safe to assume that for simplicity, I can add additional application JAR files using the three main options at the same time? spark-submit -jar additional1.jar,additional2.jar \ If I were to guess from documentation, it seems that -jars, and the SparkContext addJar and addFile methods are the ones that will automatically distribute files, while the other options merely modify the ClassPath. I hope that it is not all that complex, and that someone can give me a clear and concise answer. However, that left for me still quite some holes, although it was answered partially too.

Download spark llap jar how to#

I am aware where I can find the main Apache Spark documentation, and specifically about how to submit, the options available, and also the JavaDoc.

not to forget, the last parameter of the spark-submit is also a. If copied into a common location, where that location is (HDFS, local?). type of URI accepted: local file, HDFS, HTTP, etc. for the remote Driver (if ran in cluster mode).

If provided files are automatically distributed.Separation character: comma, colon, semicolon.The following ambiguity, unclear, and/or omitted details should be clarified for each option: including duplicating JAR references in the jars/executor/driver configuration or options.

However, there is a lot of ambiguity and some of the answers provided.
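As a rough illustration of the URL scheme rules above, the following POSIX shell function maps a `--jars` entry to the distribution strategy the documentation describes for it. It is a hypothetical helper: the name `uri_strategy` and the sample paths are made up. It only mirrors the documented prefixes and does not call or reproduce any Spark code.

```shell
#!/bin/sh
# Hypothetical helper (not part of Spark): report which documented
# distribution strategy applies to a --jars entry, based on its prefix.
uri_strategy() {
  case "$1" in
    local:*)
      # Must already exist on every worker node; no network IO.
      echo "local" ;;
    hdfs:*|http:*|https:*|ftp:*)
      # Pulled down from the URI itself.
      echo "remote" ;;
    file:*|/*)
      # Absolute paths and file:/ URIs: served by the driver's HTTP file server.
      echo "driver" ;;
    *)
      # Not one of the prefixes listed in the documentation.
      echo "unknown" ;;
  esac
}

# Example: classify a few sample entries.
for uri in local:/opt/libs/big.jar hdfs:///libs/dep.jar /opt/foo.jar file:/opt/foo.jar; do
  printf '%s -> %s\n' "$uri" "$(uri_strategy "$uri")"
done
```

Entries reported as `unknown` are simply outside the documented list. This sketch does not say how `spark-submit` treats them.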
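The advice to list a JAR in both classpath settings can be sketched as a dry run. The shell below only prints a `spark-submit` command and never runs it. It assumes the JARs already sit at the same absolute path on every node, because the extraClassPath settings only prepend entries and do not ship files. It also assumes a Linux or macOS cluster, where the JVM classpath separator is `:` (it is `;` on Windows). The class `com.example.Main`, `app.jar`, and the `/opt/libs` paths are hypothetical.

```shell
#!/bin/sh
# Sketch: build (but do not run) a spark-submit command that puts the same
# JARs on BOTH the driver and executor classpaths.
# Assumes ':' as the classpath separator (Linux/macOS JVMs).

join_with() (
  # Join arguments 2..n with the single character given in $1.
  # The subshell body keeps the IFS change local to this function.
  IFS=$1
  shift
  printf '%s\n' "$*"
)

classpath_submit_cmd() {
  # Usage: classpath_submit_cmd <main-class> <app-jar> <jar>...
  main_class=$1
  app_jar=$2
  shift 2
  cp=$(join_with : "$@")
  printf 'spark-submit --class %s --driver-class-path %s --conf spark.executor.extraClassPath=%s %s\n' \
    "$main_class" "$cp" "$cp" "$app_jar"
}

# Example (hypothetical names): print the command for inspection.
classpath_submit_cmd com.example.Main app.jar /opt/libs/a.jar /opt/libs/b.jar
```

Printing instead of running keeps the sketch safe to try. Paths containing spaces would need extra quoting before the printed line is executed.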
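The cluster-mode caveat can be turned into a small pre-flight check. The sketch below is again POSIX shell, with hypothetical function names and paths. It joins `--jars` entries with commas, the separator `spark-submit` expects for that option. In cluster mode, it also warns about entries that point at the submitting machine's file system. Treating `local:`, `hdfs:`, `s3a:`, `http:`, `https:`, and `ftp:` as reachable from every node is an assumption. `s3a:` only works if the cluster has the Hadoop S3 connector configured, and `local:` paths must really be pre-installed on each node.

```shell
#!/bin/sh
# Hypothetical pre-flight check for --jars entries (not part of Spark).

reachable_in_cluster_mode() {
  # Succeeds for schemes assumed to be readable from every node.
  case "$1" in
    local:*|hdfs:*|s3a:*|http:*|https:*|ftp:*) return 0 ;;
    *) return 1 ;;  # plain paths and file: URIs refer to the submitting machine
  esac
}

jars_arg() {
  # Usage: jars_arg <client|cluster> <jar>...
  # Prints the comma-separated value for --jars; warnings go to stderr.
  deploy_mode=$1
  shift
  for jar in "$@"; do
    if [ "$deploy_mode" = cluster ] && ! reachable_in_cluster_mode "$jar"; then
      echo "warning: $jar may not be reachable from the cluster in cluster mode" >&2
    fi
  done
  (IFS=,; printf '%s\n' "$*")
}

# Example: the second entry triggers a warning on stderr.
jars_arg cluster hdfs:///libs/dep.jar /opt/local-only.jar
```

A warning here is only a hint. A `file:` path, for example, is fine if the same file really exists on every node.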
